Refraction and an Unbiased (read “fixed”) lighting model

December 13, 2013 - 12:39 am by Joss Whittle C/C++ Graphics PhD University

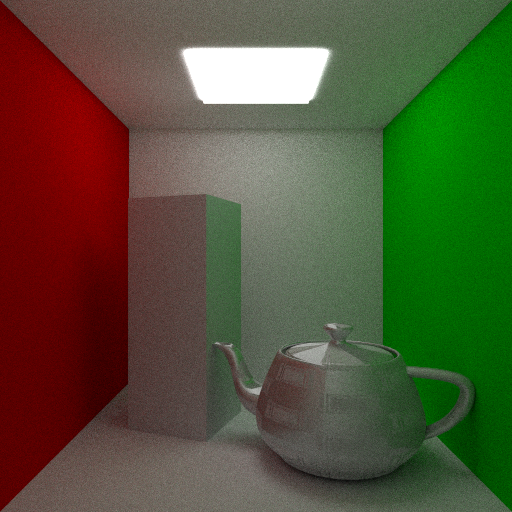

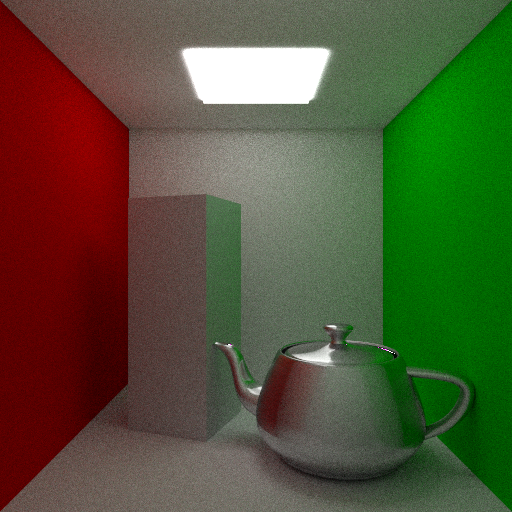

I thought I’d make some high-quality comparison shots of some of the shaders I’ve been working on. Above you can see a side-by-side comparison of a perfect mirror BRDF and a Phong-based BRDF with exponents of one million and ten for the centre and right images respectively.

While the Phong-like BRDF does give nice glossy reflections at a relatively small cost, it is not a physically accurate model. I’m currently looking into implementing a Cook–Torrance shader which, while more time consuming to compute, gives nice results that are based on physical phenomena. The Cook–Torrance shader also has the inherent ability to model microfacets within the surface of objects, which is a big plus.

Because the Phong BRDF is not physically accurate, I needed to find a PDF that would look correct in the renderings. The Mirror BRDF, for instance, has a PDF = 1 and the Diffuse BRDF has a PDF = 2 * (normal . newDirection). I read a paper that claimed the Phong marginal PDF was cos(theta)^e, which I understood (possibly incorrectly, as it did not work) to mean PDF = (normal . newDirection)^e. After some experimenting I found (gave up) that the PDF of the Diffuse BRDF in fact worked reasonably well for the Phong model.

Edit: I have found a better paper with what looks like a good Phong model. They even give a rundown of using their model in a Monte Carlo style simulation with a marginal PDF. I might try implementing this before I move on to Cook–Torrance.
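For illustration, here is a minimal sketch of one common way to importance sample a Phong lobe. It is not necessarily the model from the paper mentioned above. It assumes the angle alpha is measured from the perfect mirror direction rather than from the surface normal, and that the PDF is expressed per unit solid angle. The names `LobeSample`, `phongLobePdf` and `samplePhongLobe` are made up for this example.

```cpp
#include <cassert>
#include <cmath>

constexpr double kPi = 3.14159265358979323846;

// Hypothetical result type: a direction in the lobe's local frame, given as
// the cosine of the angle to the mirror direction plus an azimuth, and its PDF.
struct LobeSample {
    double cosAlpha;
    double phi;
    double pdf;
};

// Normalised cosine-power PDF (per unit solid angle) around the mirror direction:
// pdf = (e + 1) / (2 * pi) * cos(alpha)^e
inline double phongLobePdf(double cosAlpha, double exponent) {
    assert(exponent >= 0.0);
    if (cosAlpha <= 0.0) return 0.0; // outside the lobe's hemisphere
    return (exponent + 1.0) / (2.0 * kPi) * std::pow(cosAlpha, exponent);
}

// Draw a direction from that PDF using two uniform random numbers in [0, 1].
inline LobeSample samplePhongLobe(double u1, double u2, double exponent) {
    assert(exponent >= 0.0);
    const double cosAlpha = std::pow(u1, 1.0 / (exponent + 1.0));
    const double phi = 2.0 * kPi * u2;
    return {cosAlpha, phi, phongLobePdf(cosAlpha, exponent)};
}
```

Directions sampled this way can still point below the surface when the mirror direction is close to grazing, so a renderer using this sketch would need to discard those samples.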

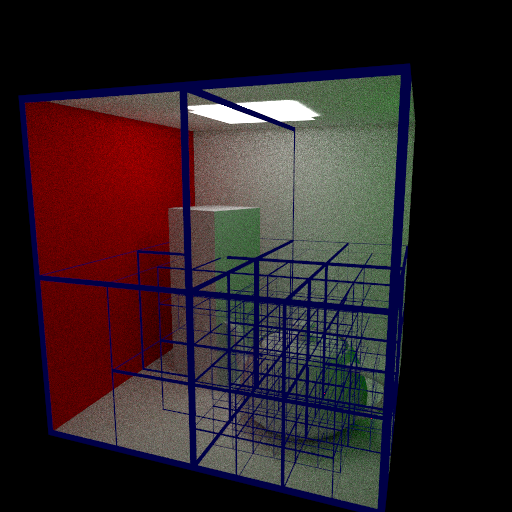

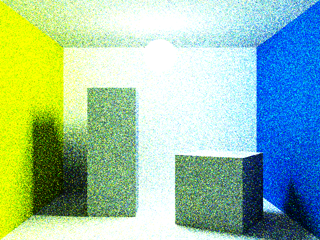

Previously I’ve debugged my KD-trees only by looking at archaic console printouts where at best each descending node in the structure is tabbed a couple of spaces further in from its parent.

However, seeing as I’m currently building a new renderer, it seemed apt to build in a method for visualizing the generated trees. This was pretty simple to add. If the preprocessor flag __AABB_EDGE__ is defined at compile time, additional code runs during tree traversal which does a simple UV coordinate calculation for the Axis-Aligned Bounding Box; if the UV is close enough to the edge of the box, the current pixel is abandoned and set to plain blue. The result is quite interesting to view and, because the C++ preprocessor strips the code out when the flag is not defined, it effectively doesn’t exist in the compiled executable, meaning it has no effect on performance.
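A rough sketch of how such a compile-time debug overlay might look is below. Only the flag name __AABB_EDGE__ comes from the post; the types, the `nearBoxEdge` test and the 2% threshold are assumptions for illustration. Here a surface point counts as "on an edge" when it is close to the box limits along at least two axes.

```cpp
#include <cassert>

struct Vec3 { double x, y, z; };
struct AABB { Vec3 min, max; };
struct Colour { double r, g, b; };

// True when point p (assumed to lie on the box surface) is within `eps`,
// measured as a fraction of the box size, of one of the box's edges.
inline bool nearBoxEdge(const AABB& box, const Vec3& p, double eps = 0.02) {
    auto nearEnd = [eps](double v, double lo, double hi) {
        assert(hi > lo);                       // sketch assumes a non-degenerate box
        const double t = (v - lo) / (hi - lo); // normalised coordinate in [0, 1]
        return t < eps || t > 1.0 - eps;
    };
    const int count = nearEnd(p.x, box.min.x, box.max.x)
                    + nearEnd(p.y, box.min.y, box.max.y)
                    + nearEnd(p.z, box.min.z, box.max.z);
    return count >= 2; // close to the limits on two axes means close to an edge
}

// Returns plain blue for edge pixels when the debug flag is defined;
// otherwise the check is compiled out and the normal colour is returned.
inline Colour debugShade(const AABB& box, const Vec3& hit, const Colour& shaded) {
#ifdef __AABB_EDGE__
    if (nearBoxEdge(box, hit)) return Colour{0.0, 0.0, 1.0};
#endif
    (void)box;
    (void)hit;
    return shaded;
}
```

Building with `-D__AABB_EDGE__` would switch the overlay on; without it `debugShade` reduces to returning the shaded colour.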

I have also been playing with specular and normal mapping. It was trivial to add this time due to the design of the shader class. In the case of specular mapping, the max value of each RGB tuple is taken as the value for the map on the [0,1] range; this is then used to linearly scale the specular exponent of the shader, which is used to calculate the glossy reflection for surfaces with an exponent > 0. Below, a checkerboard texture was used to scale a specular exponent of 128 into regions of 0 and 128 over the surface of the teapot.
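The scaling described above can be sketched in a few lines. This assumes the texel channels have already been converted to the [0,1] range; the function names are hypothetical and not taken from the renderer.

```cpp
#include <algorithm>
#include <cassert>

// Value of the specular map at a texel: the largest of the three channels.
inline double specularMapValue(double r, double g, double b) {
    return std::max({r, g, b});
}

// Linearly scale the shader's base exponent by the map value.
inline double scaledExponent(double baseExponent, double r, double g, double b) {
    assert(baseExponent >= 0.0);
    // Clamp defensively in case the texel is slightly out of range.
    const double s = std::min(1.0, std::max(0.0, specularMapValue(r, g, b)));
    return baseExponent * s;
}
```

With a black and white checkerboard and a base exponent of 128, this gives 0 on the black squares and 128 on the white ones.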

Today has been a pretty good day, both for me and for my work. For the last couple of weeks I’ve been working from home because all I am doing lately is background reading and coding my new renderer. At first it was nice to know that my workspace was only a commando roll (or a fall) out of bed away from me; but after 3 weeks it just got to be a bit much. Don’t take this to mean I didn’t leave the house for three weeks, I did, but working long hours from the comfort of my room did dramatically take its toll on me.

But enough about me going mad in the house! Today I came into the office (which I think I’ll start doing a lot more often) and have made great progress on my new C++ renderer!

It’s still not perfect, far from it in fact, but it’s progress nonetheless. I’ve been reading a lot lately about Metropolis Light Transport, Manifold Exploration, and Multiple Importance Sampling (they do love their M names), and it’s high time I started implementing some of them myself.

So it’s with great sadness that I am retiring my PRT project, which began over a year ago, all the way back at the start of my dissertation. PRT is written in Java, for simplicity, and was designed in such a way that as I read new papers about more and more complex rendering techniques I could easily drop in a new class, add a call to the render loop, or even replace the main renderer altogether with an alternative algorithm which still called upon the original framework.

I added many features over time: Ray Tracing, Photon Mapping, Phong and Blinn-Phong shading, DOF, Refraction, Glossy Surfaces, Texture Mapping, Spatial Trees, Meshes, Ambient-Occlusion, Area Lighting, Anti-Aliasing, Jitter Sampling, Adaptive Super-Sampling, Parallelization via both multi-threading and the GPU with OpenCL, Path Tracing, all the way up to Bi-Directional Path Tracing.

But time has taken its toll and too much has been added on top of what began as a very simple ray tracer. It’s time to start anew.

My plan for the new renderer is to build it entirely in C++ with the ability to easily add plugins over time like the original. Working in C++ gives the nice benefit that as time goes by I can choose to dedicate some parts of the code to the GPU via CUDA or OpenCL without too much overhead or hassle. For now, though, the plan is to rebuild the optimized maths library and get a generic framework for a renderer in place. Functioning renderers will then be built on top of the framework, each implementing different feature sets and algorithms.

Yup, the PhD is going that well…

I joke. So far it’s just been a lot of reading papers on graphics, most of which I do not understand. :(

Anyway, as a fun little side project I’ve been working on a 3D Ray Caster using my old favourites, OpenCL, OpenGL, and C++. It’s quite similar in concept to the renderer for my dissertation project last year but with a simplified rendering method and faster performance.

The goal for this project is to revisit Voxel Rendering which I played around with over the summer, and possibly to revisit game development with a new version of my Aliens First-Person Pacman game.

Currently the program has seven hardcoded Axis-Aligned Bounding Boxes (AABBs) which it renders as the camera orbits around them. I’m working on a method to organise AABBs into a flat-packed Oct-Tree which can be passed to the GPU. Once this is working it should be trivial to construct an AABB Oct-Tree of the CT Scanned skull I used before, or to construct a simple game.
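As a sketch of what "flat packed" could mean here, the example below converts a pointer-based oct-tree into a single array where children are referenced by index, which is the kind of layout that can be copied into a GPU buffer. This is only one possible layout, not the one the project ended up with. All type and function names are hypothetical, and the sketch assumes every node has either zero or eight children.

```cpp
#include <array>
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <memory>
#include <vector>

struct Box { float min[3]; float max[3]; };

// Pointer-based node, convenient to build on the CPU.
struct OctNode {
    Box bounds{};
    std::array<std::unique_ptr<OctNode>, 8> children; // all null for a leaf
    bool isLeaf() const { return !children[0]; }
};

// Pointer-free node suitable for a flat buffer.
struct FlatNode {
    Box bounds;
    std::int32_t firstChild; // index of the first of 8 consecutive children, -1 for a leaf
};

// Breadth-first flattening: the 8 children of a node end up next to each other.
inline std::vector<FlatNode> flatten(const OctNode& root) {
    std::vector<FlatNode> flat;
    std::vector<const OctNode*> order; // source node for each entry in `flat`
    flat.push_back({root.bounds, -1});
    order.push_back(&root);
    for (std::size_t i = 0; i < order.size(); ++i) {
        const OctNode* node = order[i];
        if (node->isLeaf()) continue;
        flat[i].firstChild = static_cast<std::int32_t>(flat.size());
        for (const auto& child : node->children) {
            assert(child && "sketch assumes 0 or 8 children per node");
            flat.push_back({child->bounds, -1});
            order.push_back(child.get());
        }
    }
    return flat;
}
```

Traversal code on the GPU side would then step from a node to child `k` with `firstChild + k` instead of following a pointer.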

Another thing I may look into is modifying the Aliens game to have textured floors and ceilings using floor-casting and possibly to have maps with multiple vertical levels.

So today I thought I’d try my hand at Voxel rendering. Rather than existing in a 2D image plane with an X & Y coordinate, Voxels exist in 3D space with a position in the X, Y, & Z axes. A prime example of the use of voxels in rendering is the popular game Minecraft. In Minecraft, randomly generated terrain is modelled as large fields of voxels with each voxel rendered as a textured cube. Because the terrain is not just a surface coated in triangles but rather a volume with internal data, the terrain in Minecraft can be modified and changed during runtime with little effect on performance.

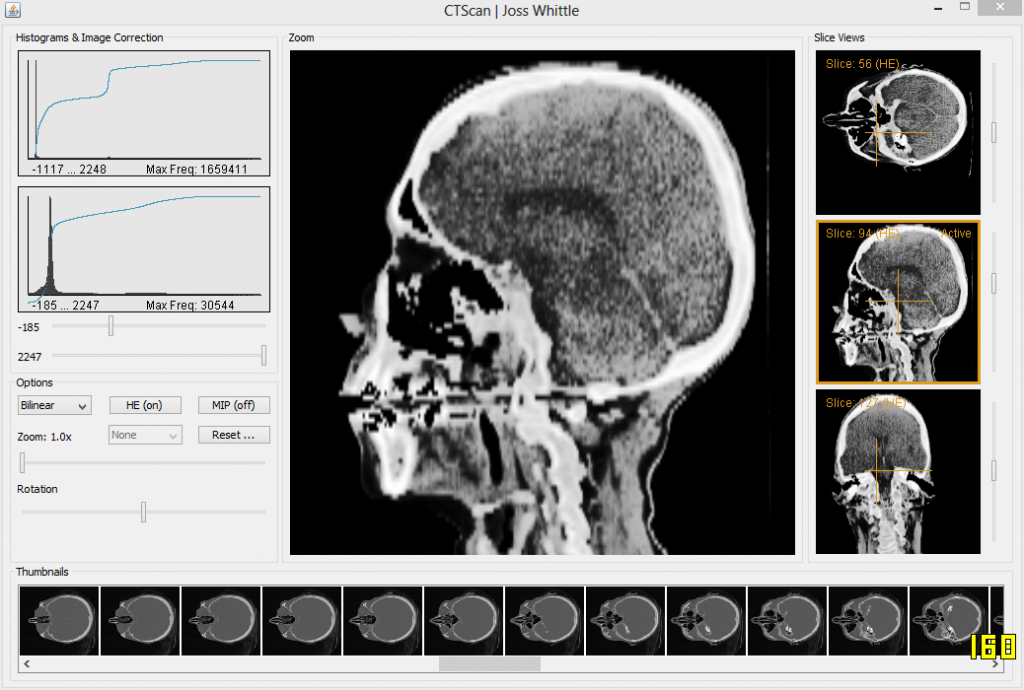

Voxels are useful for displaying other kinds of volume data too. CT Scans are medical images which capture 3D density plots of a patient’s body. By capturing density at each location throughout the scanned tissue the data can be replayed using a computer to literally look inside the body and see where possible irregularities and illness may reside.

In my second year at university I was given a Computer Graphics coursework to read in and display the data for a CT Scan. It was very low resolution, only 256 * 256 * 113 voxels in size, but it worked well for the purposes of the coursework, which was simply to demonstrate the ability to parse the volume data into usable slices (not that hard). There were several bonus sections to the project such as histogram equalization and density bounding to eliminate tissue we didn’t care about. Another one of the bonuses was for rendering the volume data in 3D, with a hint to use trilinear interpolation. At the time the workload we were under was horrendous due to the year-long group engineering project, so while I eagerly completed all of the other bonuses I just didn’t have time to get 3D working. Until now.

It’s not pretty, and in fact it’s really not pretty when you make it 3D due to the low input resolution of the scan. But there it is! The same CT Scan now rendered in 3D in OpenGL. I used the Java bindings for OpenGL (JOGL) so I could use the same loader code I wrote in 2nd year to parse the binary CT file into a 3D array of shorts.
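For reference, the trilinear interpolation hinted at in the coursework can be sketched as follows. This is written in C++ rather than the Java used for the project, and it assumes the densities are stored as one flat array of shorts with x varying fastest. The `Volume` type and function names are invented for the example.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

struct Volume {
    int nx, ny, nz;
    std::vector<short> data; // nx * ny * nz densities, x varying fastest

    short at(int x, int y, int z) const {
        assert(x >= 0 && x < nx && y >= 0 && y < ny && z >= 0 && z < nz);
        const std::size_t sx = static_cast<std::size_t>(x);
        const std::size_t sy = static_cast<std::size_t>(y);
        const std::size_t sz = static_cast<std::size_t>(z);
        return data[sx + static_cast<std::size_t>(nx) * (sy + static_cast<std::size_t>(ny) * sz)];
    }
};

// Linear blend between a and b.
inline double mix(double a, double b, double t) { return a + (b - a) * t; }

// Density at a fractional voxel coordinate, blended from the 8 surrounding voxels.
inline double sampleTrilinear(const Volume& v, double x, double y, double z) {
    // Clamp so that coordinates outside the volume read the nearest border voxel.
    x = std::min(std::max(x, 0.0), static_cast<double>(v.nx - 1));
    y = std::min(std::max(y, 0.0), static_cast<double>(v.ny - 1));
    z = std::min(std::max(z, 0.0), static_cast<double>(v.nz - 1));
    const int x0 = static_cast<int>(std::floor(x)), x1 = std::min(x0 + 1, v.nx - 1);
    const int y0 = static_cast<int>(std::floor(y)), y1 = std::min(y0 + 1, v.ny - 1);
    const int z0 = static_cast<int>(std::floor(z)), z1 = std::min(z0 + 1, v.nz - 1);
    const double tx = x - x0, ty = y - y0, tz = z - z0;
    // Blend along x, then y, then z.
    const double c00 = mix(v.at(x0, y0, z0), v.at(x1, y0, z0), tx);
    const double c10 = mix(v.at(x0, y1, z0), v.at(x1, y1, z0), tx);
    const double c01 = mix(v.at(x0, y0, z1), v.at(x1, y0, z1), tx);
    const double c11 = mix(v.at(x0, y1, z1), v.at(x1, y1, z1), tx);
    return mix(mix(c00, c10, ty), mix(c01, c11, ty), tz);
}
```

For the scan described above the volume would be constructed with dimensions 256, 256 and 113.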

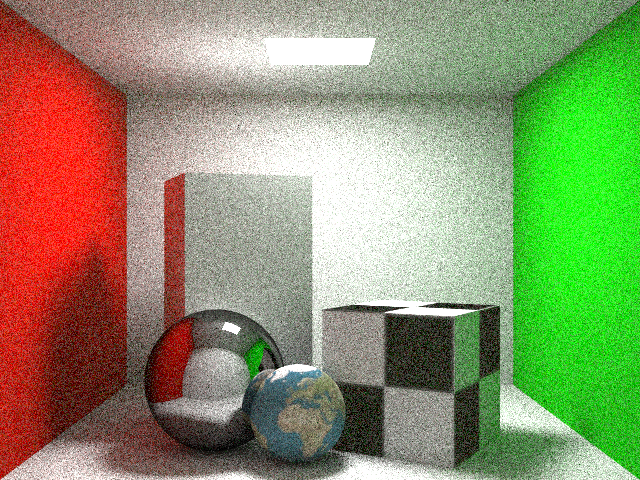

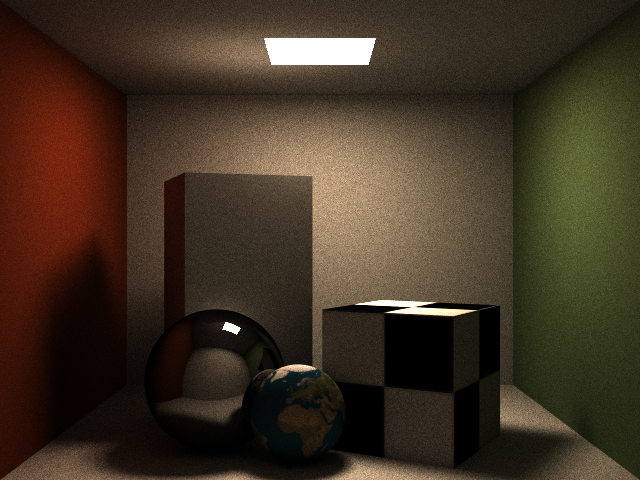

So I’m back to working on this again. It’s a lot better than before but it’s not perfect. I’m still using unweighted samples during path generation and I am still generating a fresh path (highly randomized in path traced -> light traced construction) for every sample. Because of this, the noise that still persists in the image above will never feasibly go away. Today I plan to look at “Multiple Importance Sampling” in a lot more depth.

After a minor crisis over being horribly unprepared to start my PhD in October, I thought I’d try to delve back into the world of graphics with something simple (ha). I decided to try and extend my Java Path Tracer to support a rendering mode for Bi-Directional Path Tracing, something which I now realize is easier said than done. Ideally I would have introduced Bi-Directional Path Tracing (BDPT) to my dissertation project last year; however, I simply never had the time to wrap my head around all the mathematics so I left it out.

The image above was rendered at 640 x 480 but I have downsampled the image here to 320 x 240 to make up for the fact I was only able to render 100 samples before my computer BSOD’d. That’s right, because if you can make a Java program cause a BSOD then you know something is terribly amiss. My theory is that it’s simply too much recursion for the JVM. Even though I am running the 64-bit version with an increased memory quota, the stack for function calls is still (annoyingly) a memory-limited 32-bit stack. And seeing as this is just a toy project I work on whenever I start to panic about next year, it doesn’t seem worth spending the time to convert the whole thing from a recursive renderer to an iterative one.
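To show what the recursive-to-iterative conversion would involve, here is a toy sketch in C++ (the project itself is in Java). It strips a path down to scalars: each bounce has some emitted light and a fraction of incoming light it reflects onward. The `Bounce` type and both functions are made up for the example and ignore everything a real path tracer does to find those values.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// One hypothetical path vertex: light emitted there, and the fraction of
// light arriving from further along the path that it passes back.
struct Bounce {
    double emitted;
    double reflectance;
};

// Recursive form: L_i = emitted_i + reflectance_i * L_(i+1).
// The call depth grows with the path length.
inline double radianceRecursive(const std::vector<Bounce>& path, std::size_t i = 0) {
    if (i >= path.size()) return 0.0;
    return path[i].emitted + path[i].reflectance * radianceRecursive(path, i + 1);
}

// Iterative form: carry a running throughput instead of a call stack.
inline double radianceIterative(const std::vector<Bounce>& path) {
    double radiance = 0.0;
    double throughput = 1.0; // product of the reflectances seen so far
    for (const Bounce& b : path) {
        assert(b.reflectance >= 0.0);
        radiance += throughput * b.emitted;
        throughput *= b.reflectance;
    }
    return radiance;
}
```

Both functions compute the same sum; the iterative one uses constant stack space no matter how many bounces the path has.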