November 6, 2013 - 4:13 pm by Joss Whittle

C/C++ GPGPU Graphics Java L2Program PhD

It’s still not perfect, far from it in fact, but it’s progress nonetheless. I’ve been reading a lot lately about Metropolis Light Transport, Manifold Exploration, and Multiple Importance Sampling (they do love their M names), and it’s high time I started implementing some of them myself.

So it’s with great sadness that I am retiring my PRT project, which began over a year ago, all the way back at the start of my dissertation. PRT is written in Java, for simplicity, and was designed so that as I read new papers about more and more complex rendering techniques I could easily drop in a new class, add a call to the render loop, or even replace the main renderer altogether with an alternative algorithm which still called upon the original framework.

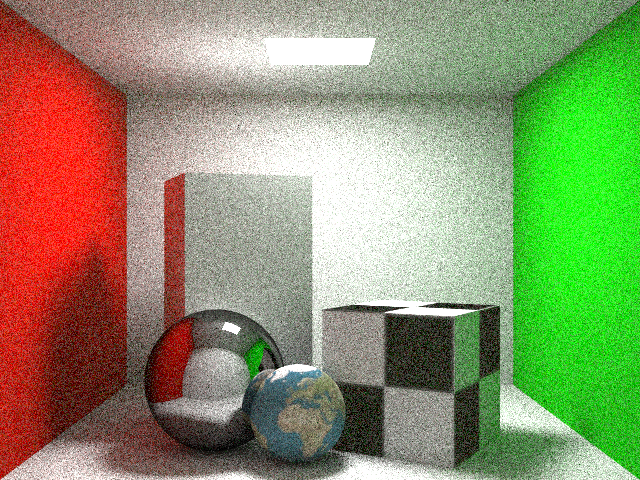

I added many features over time: Ray Tracing, Photon Mapping, Phong and Blinn-Phong shading, DOF, Refraction, Glossy Surfaces, Texture Mapping, Spatial Trees, Meshes, Ambient-Occlusion, Area Lighting, Anti-Aliasing, Jitter Sampling, Adaptive Super-Sampling, Parallelization via both multi-threading and the GPU with OpenCL, Path Tracing, all the way up to Bi-Directional Path Tracing.

But time has taken its toll, and too much has been added on top of what began as a very simple ray tracer. It’s time to start anew.

My plan for the new renderer is to build it entirely in C++, with the ability to easily add plugins over time like the original. Working in C++ has the nice benefit that, as time goes by, I can choose to move some parts of the code to the GPU via CUDA or OpenCL without too much overhead or hassle. For now, though, the plan is to rebuild the optimized maths library and get a generic framework for a renderer in place. Functioning renderers will then be built on top of the framework, each implementing different feature sets and algorithms.

Tags

Bi-Directional Path Tracing, Concurrency, CUDA, Depth of Field, Global Illumination, GPGPU, Monte Carlo Integration, OpenCL, Path Tracing, Photon Mapping, Ray Tracing

October 24, 2012 - 2:45 pm by Joss Whittle

Dissertation Graphics Java University

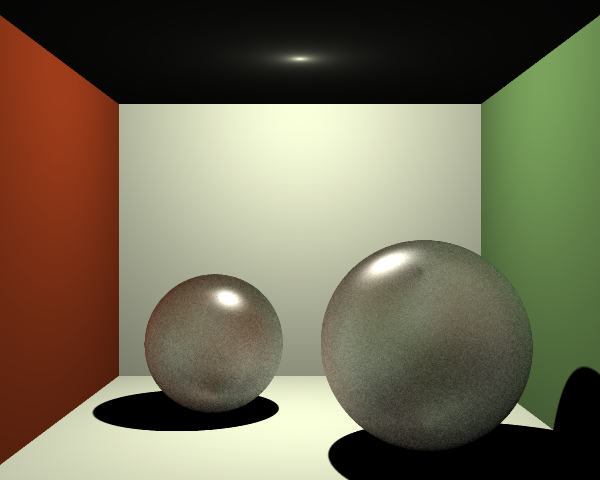

So this evening I thought I’d have a look’see at some of the more advanced ray tracing techniques that I didn’t have time to play with during my dissertation research. Namely, Depth-Of-Field and Specular/Glossy Reflections.

Essentially, Depth-Of-Field mimics the focus point of an eye or camera: there is a set focal distance at which everything is crisp and in focus, and everything in front of or behind that point becomes progressively fuzzier the further it is from it. To perform DOF in a ray tracer we sample multiple rays for each of our original primary rays, each at a slight offset.

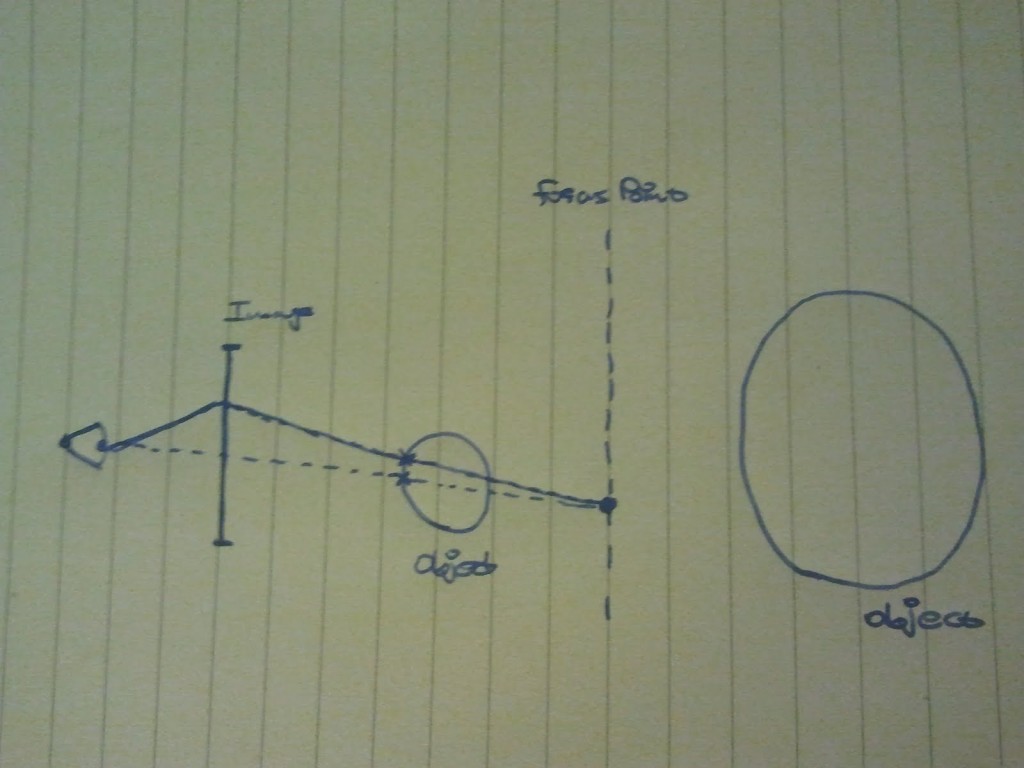

In the image below the dotted line represents the ‘true’ original ray path to its first intersection with the geometry. The solid line represents a sampled ray whose origin has been offset by a random amount. Both rays pass through the same point on the focal plane, which lies at the focal distance from the camera. Any objects at the focal plane will appear crisp and in focus, while anything closer or further away will appear out of focus. This can be seen in where the two rays (solid and dotted) intersect the sphere: each returns a different colour, and averaging them produces the blurred colour of the out-of-focus sphere.
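The ray construction described above can be sketched in a few lines of Java. This is only an illustration, not the code from PRT: the `Vec3` and `Ray` classes, the `sampleRay` name and the square aperture spanned by the `right` and `up` vectors are all assumptions made for the example.

```java
import java.util.Random;

public class DofSampler {
    /** Minimal 3D vector; a hypothetical stand-in for the renderer's maths library. */
    static final class Vec3 {
        final double x, y, z;
        Vec3(double x, double y, double z) { this.x = x; this.y = y; this.z = z; }
        Vec3 add(Vec3 o) { return new Vec3(x + o.x, y + o.y, z + o.z); }
        Vec3 sub(Vec3 o) { return new Vec3(x - o.x, y - o.y, z - o.z); }
        Vec3 scale(double s) { return new Vec3(x * s, y * s, z * s); }
        double dot(Vec3 o) { return x * o.x + y * o.y + z * o.z; }
        Vec3 normalize() { return scale(1.0 / Math.sqrt(dot(this))); }
    }

    /** Hypothetical ray type: an origin and a unit direction. */
    static final class Ray {
        final Vec3 origin, direction;
        Ray(Vec3 origin, Vec3 direction) { this.origin = origin; this.direction = direction; }
    }

    /**
     * Builds one depth-of-field sample for a primary ray.
     * forward, right and up are assumed to be unit vectors describing the camera;
     * apertureSize is the side length of a square aperture (3.0 for a 3x3 unit aperture).
     */
    static Ray sampleRay(Ray primary, Vec3 forward, Vec3 right, Vec3 up,
                         double focalDistance, double apertureSize, Random rng) {
        // Point where the primary (dotted) ray crosses the focal plane.
        double t = focalDistance / primary.direction.dot(forward);
        Vec3 focalPoint = primary.origin.add(primary.direction.scale(t));

        // Offset the origin by a random amount inside the aperture.
        double dx = (rng.nextDouble() - 0.5) * apertureSize;
        double dy = (rng.nextDouble() - 0.5) * apertureSize;
        Vec3 origin = primary.origin.add(right.scale(dx)).add(up.scale(dy));

        // The sampled (solid) ray still passes through the same focal point.
        return new Ray(origin, focalPoint.sub(origin).normalize());
    }
}
```

Calling `sampleRay` once per sample, tracing each returned ray and averaging the resulting colours would give the blurred pixel value.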

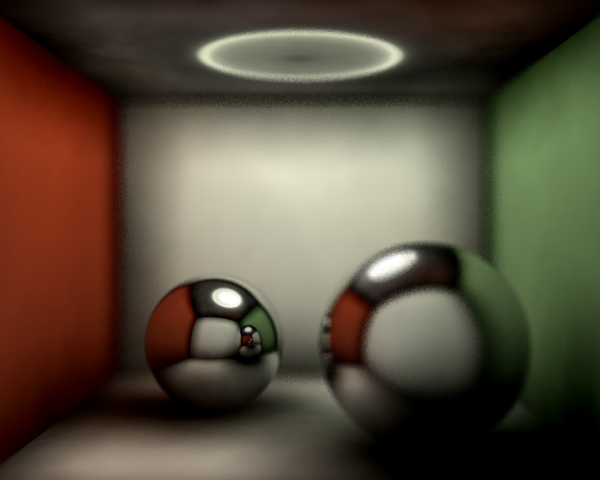

Here is the effect of a 3×3 unit aperture using 25 random samples per ray. As you would expect the render took around 25 times longer to produce the final output, which is worrying because truth be told we really need 100+ samples (closer to 300-500) to produce smooth and clear results without noise.

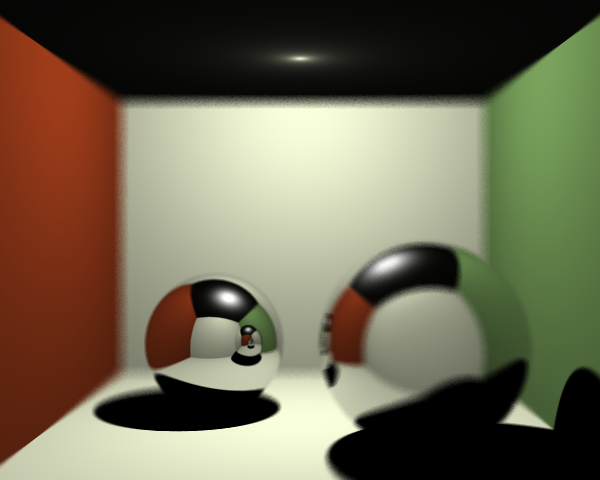

Here the quality of the DOF simulation has been upped, however I had to turn off photon mapping to accommodate the increased workload. Here we are rendering with a 3×3 unit aperture but at 100 samples per pixel.
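As a rough guide to why the sample counts climb so quickly: assuming the samples are independent and uniformly random (plain Monte Carlo, without stratification or jittering), the noise in the averaged pixel value falls only with the square root of the number of samples $N$, where $\sigma$ is the spread of a single sample:

$$
\text{noise} \approx \frac{\sigma}{\sqrt{N}}
$$

So going from 25 to 100 samples costs about 4 times as much but only halves the noise, and 300-500 samples cost 12 to 20 times as much as 25 for roughly a 3.5 to 4.5 times reduction.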

I think tomorrow I might look at some of the more efficient ways of computing DOF. There’s a rather neat method which relies on storing the depth of each pixel in the image and using that to apply a weighted blurring function after the image has been rendered. Cheating, I know, but it will be a hell of a lot faster than multiplying the entire workload of the render by an incredibly large sample size.
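To give a feel for the idea, here is a minimal sketch of a depth-driven blur in Java. It is not the specific method referred to above: it uses a plain box average whose radius grows with each pixel's distance from the focal plane, works on a single greyscale channel, and the `blur` name and the `strength` and `maxRadius` parameters are made up for the example.

```java
public class DepthBlur {
    /**
     * Blurs a greyscale image after rendering, using the stored depth of each pixel.
     * image[y][x] is the rendered intensity and depth[y][x] the distance to the hit point.
     * strength (blur radius in pixels per unit of depth) and maxRadius are illustrative
     * knobs, not values from any particular paper.
     */
    static double[][] blur(double[][] image, double[][] depth,
                           double focalDistance, double strength, int maxRadius) {
        int h = image.length, w = image[0].length;
        double[][] out = new double[h][w];
        for (int y = 0; y < h; y++) {
            for (int x = 0; x < w; x++) {
                // Pixels further from the focal distance get a larger blur radius.
                int r = (int) Math.min(maxRadius,
                        Math.round(strength * Math.abs(depth[y][x] - focalDistance)));
                double sum = 0.0;
                int count = 0;
                // Average the neighbours inside the radius, clamped to the image edges.
                for (int j = Math.max(0, y - r); j <= Math.min(h - 1, y + r); j++) {
                    for (int i = Math.max(0, x - r); i <= Math.min(w - 1, x + r); i++) {
                        sum += image[j][i];
                        count++;
                    }
                }
                out[y][x] = sum / count;
            }
        }
        return out;
    }
}
```

The cost here depends on the image size and blur radius rather than on the number of rays traced, which is where the speed-up would come from. A simple gather like this ignores occlusion, so in-focus objects can bleed into blurred ones at depth edges.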

Finally, this evening I had a ‘brief’ (3 and a half hours of reading, coding, swearing, reading, debugging, reading, swearing, crying, and coding) go at trying to implement Glossy Reflections (otherwise known as specular reflections). Well… As you can see in the image below it all works perfectly and it wasn’t a colossal waste of time and energy…. …. …. I’ll try again another time.

Tags

Depth of Field, Global Illumination, Ray Tracing